Understanding the key differences between LXC and Docker

Linux containers (LXC) have the potential to transform how we run and scale applications. Container technology is not new, but mainstream support in the vanilla kernel is, and that paves the way for widespread adoption.

FreeBSD has Jails, Solaris has Zones, and there are other container technologies like OpenVZ and Linux VServer, but these depend on custom kernels, which has impeded their adoption.

There is an excellent presentation on the history and current state of Linux containers by Dr. Rami Rosen, which provides fantastic perspective and context.

What's the fuss?

Containers isolate and encapsulate your application workloads from the host system. Think of a container as an OS within your host OS, in which you can install and run applications, and which for all practical purposes behaves like a virtual machine.

This is made possible by the Linux kernel and the LXC project, which provides container OS templates for various distributions and user-facing tools for container lifecycle management.

Portability

Containers decouple your applications from the host OS, abstract them and make them portable across any system that supports LXC. To call this useful would be a wild understatement. Users can keep a clean, minimal base Linux OS and run everything else, let's say a LAMP stack, in a container.

Because apps and workloads are isolated, users can run multiple versions of PHP, Python, Ruby and Apache happily coexisting, each tucked away in its own container. They also get cloud-like flexibility: instances and workloads can be easily moved across systems, cloned, backed up and deployed rapidly.

But doesn't virtualization do this?

Yes, but at a performance penalty and without the same flexibility. Containers do not emulate a hardware layer; they use cgroups and namespaces in the Linux kernel to create lightweight virtualized OS environments with near bare-metal speeds. Since you are not virtualizing storage, a container doesn't care about the underlying storage or file system and simply operates wherever you put it.
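As a small illustration of how cgroups constrain a container without any emulated hardware, here is a sketch that interprets a CPU limit. It assumes the cgroup v2 interface, where a group's `cpu.max` file holds `QUOTA PERIOD` in microseconds, or `max PERIOD` when the group is not throttled; cgroup v1 splits the same two numbers across `cpu.cfs_quota_us` and `cpu.cfs_period_us`. The function names `cpu_limit` and `read_cpu_limit` are hypothetical helpers, not part of LXC or Docker.

```python
from typing import Optional


def cpu_limit(cpu_max: str) -> Optional[float]:
    """Return the CPU limit, in CPUs, encoded by a cgroup v2 cpu.max line.

    The line has the form "QUOTA PERIOD" (both in microseconds). A quota of
    "max" means the group is not throttled, so None is returned.
    """
    quota, period = cpu_max.split()
    if quota == "max":
        return None
    # The group may use `quota` microseconds of CPU time in every `period`
    # microseconds, so quota / period is the number of CPUs it can keep busy.
    return int(quota) / int(period)


def read_cpu_limit(cgroup_dir: str) -> Optional[float]:
    """Read cpu.max from a cgroup v2 directory (for example one under /sys/fs/cgroup)."""
    with open(f"{cgroup_dir}/cpu.max") as f:
        return cpu_limit(f.read())
```

For example, `cpu_limit("50000 100000")` gives `0.5`: processes in that group share half of one CPU, and the kernel scheduler enforces it directly, which is why no hypervisor is needed.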

This fundamentally changes the way we virtualize workloads and applications, as containers are simply faster, more portable and able to scale more efficiently than hardware virtualization, with the exception of workloads that require an OS other than Linux or a specific Linux kernel version.

Is it game over for VMware then?

Not so fast! Virtualization is mature, with extensive tooling and ecosystems to support its deployment across various environments. And for workloads that require a non-Linux OS or a specific kernel, virtualization remains the only way.

LXC owes its origin to the development of cgroups and namespaces in the Linux kernel to support lightweight virtualized OS environments (containers), and to some early work by Daniel Lezcano and Serge Hallyn at IBM dating from 2009.

The LXC project is supported by Ubuntu and powers Ubuntu Juju. It provides base OS container templates and a comprehensive set of tools for container lifecycle management. It is currently led by a two-member team, Stephane Graber and Serge Hallyn from Ubuntu.

LXC is actively developed but not well documented beyond Ubuntu. Cross-distribution documentation is lacking, and things usually work well in Ubuntu first, leading to all-round frustration and hair pulling for users of other distributions.

There is a lot of confusing, outdated and often just misleading information online. Add to the mix Docker, which has aggressively marketed itself to the wider community (Ubuntu, why so quiet?), and the volume of information and the scope for confusion have widened.

What is LXC, how does Docker relate to LXC, and how do they differ? Is Docker a user-friendly front end to LXC? Does LXC simply refer to Linux kernel-level features that are left to userland programs like Docker to implement?

LXC maintainer Stephane Graber's excellent 10-part blog series on LXC 1.0 and our LXC Getting Started guide answer some of those questions with clarity and are a perfect introduction to LXC and its capabilities.

How they differ

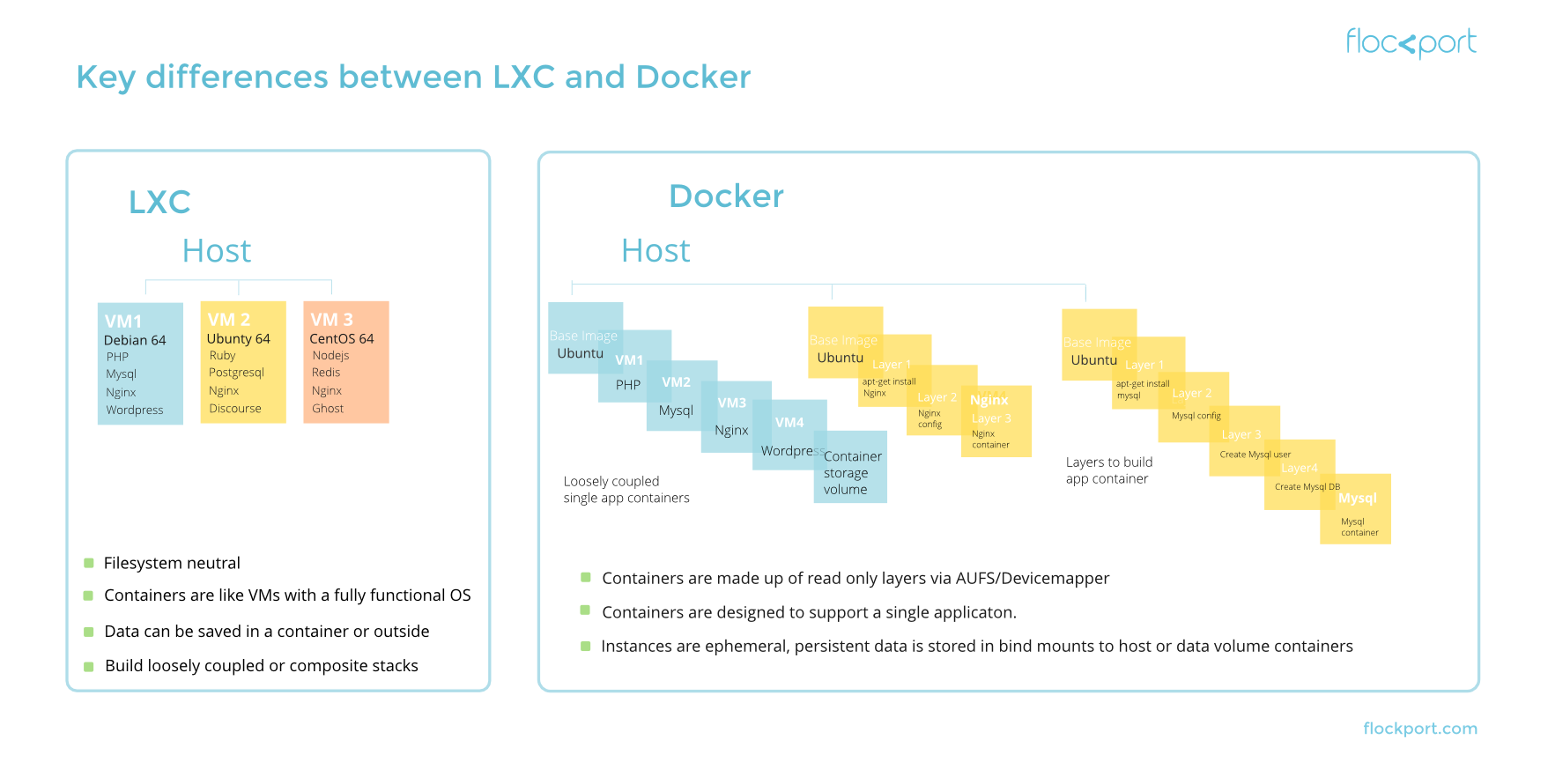

LXC is a container technology that gives you lightweight Linux containers, while Docker is a single-application virtualization engine based on containers. They may sound similar but are completely different.

Unlike LXC containers, Docker containers do not behave like lightweight VMs and cannot be treated as such. Docker containers are restricted to a single application by design.

You can log in to your LXC container, treat it like an OS, install your applications and services, and they will work as expected. You can't do that in a Docker container. The Docker base OS template is pared down to a single-app environment: it does not have a proper init system and does not support things like services, daemons, syslog, cron or running multiple applications.

Docker

Docker is a project by dotCloud (now Docker Inc.), released in March 2013 and based on the LXC project, for building single-application containers. Docker has since developed its own implementation, libcontainer, which uses the kernel's container capabilities directly.

It's useful to think of Docker as a specific use case of Linux containers: building loosely coupled, 'frozen in state' applications as services.

This is an interesting use case for containers, but pursuing 'frozen in state' apps at the container level introduces inherent complexity to containers, and it makes Docker attractive mainly to developers and experts familiar with enterprise concepts like SOA, service discovery, registries and statelessness.

Docker introduces a number of interesting changes and concepts to containers. It encourages you to build loosely coupled apps and to think of a 'container as an app': the Docker base container OS template is basically designed to support this one app. This is not a guide to Docker or LXC; please see the LXC guides linked above or visit the Docker website to get a basic perspective.

- Layered containers

Docker uses AUFS, devicemapper or btrfs to build containers from read-only file system layers. A Docker image is a stack of read-only layers used to build your application. When a Docker container runs, only the top layer is read-write; all the layers beneath it are read-only, and the top layer holds transient data until it is committed to a new layer, which produces a new image. There is inherent complexity and a performance penalty in using overlays or layers of read-only file systems.

- Single application containers

Docker restricts the container to a single process. The default Docker base image OS template is not designed to support multiple applications, processes or services like init, cron, syslog, ssh and so on. As you can imagine, this introduces a certain amount of complexity and has huge implications for day-to-day usage. Since current architectures, applications and services are designed to operate in normal multi-process OS environments, you would need to find a Docker way to do things or use tools that support Docker. For a LAMP stack, for instance, you would need to build three containers that consume services from each other: a PHP container, an Apache container and a MySQL container. Can you build all three in one container? You can, but there is no way to run php-fpm, apache and mysqld in the same container without a shell script or a separate process manager like runit or supervisor.

- Separate states

Docker separates container storage from the application: you mount persistent data outside the container, on the host, with bind mounts or in data-volume-only containers. Unless your use case involves only containers with non-persistent data, this has the potential to make Docker containers less portable.

- Registry

Docker provides public and private registries that users can push images to and pull images from. Images are the read-only layers used to compose the application, which makes it easy for users to share and distribute applications.
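The registry idea of sharing images as stacks of read-only layers can be sketched in a few lines. This is a simplified, hypothetical model: the `Registry` class and its `push`/`pull` methods are not Docker's registry API, and it assumes each layer is stored under the SHA-256 digest of its content, so a layer shared by several images is stored and uploaded only once.

```python
import hashlib
from dataclasses import dataclass, field


def digest(blob: bytes) -> str:
    """Content address of a layer: the SHA-256 of its bytes."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


@dataclass
class Registry:
    # digest -> layer bytes; each distinct layer is stored exactly once
    blobs: dict = field(default_factory=dict)
    # "name:tag" -> ordered list of layer digests, base layer first
    manifests: dict = field(default_factory=dict)

    def push(self, ref: str, layers: list) -> int:
        """Store an image as an ordered stack of layers; return how many new layers were uploaded."""
        uploaded = 0
        digests = []
        for blob in layers:
            d = digest(blob)
            if d not in self.blobs:  # layers already in the registry are skipped
                self.blobs[d] = blob
                uploaded += 1
            digests.append(d)
        self.manifests[ref] = digests
        return uploaded

    def pull(self, ref: str) -> list:
        """Return the image's layers in order, checking each one against its digest."""
        layers = []
        for d in self.manifests[ref]:
            blob = self.blobs[d]
            if digest(blob) != d:
                raise ValueError(f"corrupt layer {d}")
            layers.append(blob)
        return layers
```

Pushing a PHP image and then a Python image built on the same base layer uploads the base only once; the second push transfers just the Python layer. Addressing layers by content is one simple way to get that sharing for free.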

Widening gap with LXC

LXC features need to be reimplemented by the Docker team to be available in Docker. For instance, LXC now supports unprivileged containers, which let non-root users create and deploy containers; Docker does not support this yet. And with the recent announcement of libcontainer, the capabilities of the two will presumably keep growing apart.

There is no right or wrong way to run containers; it's up to users. The Docker approach is unique and will necessitate custom approaches at every stage, from installing and running applications to scaling containers, to find the Docker way of accomplishing each task.

Taking just one example: in clustered systems you run agents or use SSH to monitor health, run checks, respond to events, orchestrate actions and update configuration on the fly, e.g. for security updates. You can't run agents or SSH into Docker containers, as either would become a second application in the container.
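Running an agent or sshd next to the main application means the container needs something that starts and watches several processes. The sketch below is a toy stand-in for process managers like runit or supervisor, not their actual behaviour or configuration: the `supervise` function and its `max_restarts` parameter are hypothetical. It starts every command, restarts any that exit with a non-zero status up to a limit, and returns once all of them have finished.

```python
import subprocess
import sys
import time


def supervise(commands, max_restarts=3, poll_interval=0.1):
    """Run several commands side by side, restarting any that fail.

    commands maps a service name to an argv list. Returns two dicts:
    the final exit code of each service and how often it was restarted.
    """
    running = {name: subprocess.Popen(argv) for name, argv in commands.items()}
    restarts = {name: 0 for name in commands}
    exit_codes = {}
    while running:
        for name, proc in list(running.items()):
            code = proc.poll()
            if code is None:
                continue  # still running
            if code != 0 and restarts[name] < max_restarts:
                restarts[name] += 1
                running[name] = subprocess.Popen(commands[name])
            else:
                exit_codes[name] = code
                del running[name]
        time.sleep(poll_interval)
    return exit_codes, restarts


if __name__ == "__main__":
    # Hypothetical stand-ins for an application and a monitoring agent.
    services = {
        "app": [sys.executable, "-c", "print('app done')"],
        "agent": [sys.executable, "-c", "import sys; sys.exit(1)"],
    }
    print(supervise(services, max_restarts=2))
```

A real process manager running as PID 1 also has to forward signals and reap orphaned child processes, which this sketch ignores; that extra machinery is part of the complexity the single-application model pushes onto users.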

Changing system files like /etc/hosts is involved, and application configuration changes need to be written to a new layer and committed.
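The layer model from the layered containers item above, and why an edit has to be committed to survive, can be sketched as a toy union file system. This is an in-memory model under stated assumptions, not how AUFS, devicemapper or btrfs actually store data: the `LayeredFS` class is hypothetical, reads fall through from the writable top layer to the read-only layers beneath, deletions are recorded as whiteout markers, and `commit` freezes the top layer into a new read-only layer.

```python
from types import MappingProxyType

WHITEOUT = object()  # marker meaning "deleted in this layer"


class LayeredFS:
    def __init__(self, base_layers=()):
        # Read-only layers, lowest first; wrapped so they cannot be modified.
        self.layers = [MappingProxyType(dict(layer)) for layer in base_layers]
        self.top = {}  # the single writable layer; transient until committed

    def read(self, path):
        # Search from the writable layer down through the read-only layers.
        for layer in [self.top, *reversed(self.layers)]:
            if path in layer:
                value = layer[path]
                if value is WHITEOUT:
                    raise FileNotFoundError(path)
                return value
        raise FileNotFoundError(path)

    def write(self, path, data):
        # Copy-on-write: the change lands in the top layer only.
        self.top[path] = data

    def delete(self, path):
        # Lower layers are read-only, so hide the file with a whiteout instead.
        self.top[path] = WHITEOUT

    def commit(self):
        # Freeze the writable layer into a new read-only layer and start a fresh one.
        self.layers.append(MappingProxyType(self.top))
        self.top = {}
        return len(self.layers)
```

Editing `/etc/hosts` in this model leaves the base layer untouched and only changes the top layer; if the container goes away before `commit()`, the edit goes with it.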

If you are trying to build a read-only app that is 'frozen in state', as Docker is designed for, the trade-off in complexity and constraints makes sense. For other use cases, these limitations can be a significant challenge to manage.

The way ahead

Statelessness is not an easy problem. Usually applications have to be designed to be stateless by keeping state on the client side or on the network, or it's up to devops to replicate state and keep it resilient. If the application writes to the file system, as most applications do, the state problem simply shifts to the user and now exists outside the container.
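How the state problem moves outside the container can be shown with a small simulation. Everything here is hypothetical: `run_container` models a container's writable layer as a temporary directory that is discarded when the run ends, and a `volumes` mapping (container mount point to host directory) plays the role of a bind mount. Only writes under a mounted path survive, and those now live on the host, where someone has to back them up and move them along with the container.

```python
import tempfile
from pathlib import Path


def run_container(writes, volumes):
    """Simulate one container run and return the host paths that outlive it.

    writes:  {container_path: text} written by the application while it runs.
    volumes: {container_mount_point: host_dir}, standing in for bind mounts.
    """
    persisted = []
    with tempfile.TemporaryDirectory() as writable_layer:
        for path, text in writes.items():
            target = None
            for mount, host_dir in volumes.items():
                prefix = mount.rstrip("/") + "/"
                if path.startswith(prefix):
                    # Inside a volume: the write goes straight to the host directory.
                    target = Path(host_dir) / path[len(prefix):]
                    persisted.append(target)
                    break
            if target is None:
                # Anywhere else: the write lands in the container's own writable layer.
                target = Path(writable_layer) / path.lstrip("/")
            target.parent.mkdir(parents=True, exist_ok=True)
            target.write_text(text)
    # Leaving the with-block deletes the writable layer and everything written to it.
    return persisted
```

With `/var/lib/mysql` mapped to a host directory, a database file written there survives the run while a scratch file in `/tmp` does not, so moving the container alone no longer moves its data.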

Virtualization enabled the cloud by allowing the OS and apps to be frozen in state, transforming them into instances that can be easily moved around. LXC adds even more speed, flexibility and portability, expanding the possibilities.

Docker's use of layers of frozen state, mainly to allow reuse and composition, is interesting but exacts a trade-off in complexity and performance. The constraint of single applications narrows its use case further.

You can build composite or single-app containers in LXC. You can use a btrfs file system to easily run hundreds of containers cloned from a single subvolume, solving the complex problem of space transparently at the file system level, use clones to freeze or distribute state, and use CMS tools to manage it. LXC gives you a wide range of capabilities and the freedom to architect and run your containers.
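Why hundreds of clones of one subvolume cost so little space comes down to copy-on-write: a clone shares every block with its source until one of them writes. The toy model below counts physical blocks to show this; `BlockPool`, `CowVolume` and `blocks_in_use` are hypothetical and ignore metadata, extents and everything else a real btrfs snapshot involves. On a real system the clone would come from `btrfs subvolume snapshot` or LXC's own cloning tools.

```python
class BlockPool:
    """Physical storage shared by a volume and all of its clones."""

    def __init__(self):
        self.blocks = {}  # block id -> data
        self._next_id = 0

    def allocate(self, data):
        block_id = self._next_id
        self.blocks[block_id] = data
        self._next_id += 1
        return block_id


class CowVolume:
    def __init__(self, pool, block_ids):
        self.pool = pool
        self.block_ids = list(block_ids)  # logical block index -> physical block id

    @classmethod
    def create(cls, pool, contents):
        return cls(pool, [pool.allocate(data) for data in contents])

    def clone(self):
        # Cloning copies only the block map; no data is duplicated.
        return CowVolume(self.pool, self.block_ids)

    def read(self, index):
        return self.pool.blocks[self.block_ids[index]]

    def write(self, index, data):
        # Copy-on-write: give this volume a private block; other volumes keep the old one.
        self.block_ids[index] = self.pool.allocate(data)


def blocks_in_use(volumes):
    """Number of physical blocks referenced by at least one volume."""
    return len({block_id for vol in volumes for block_id in vol.block_ids})
```

A base volume of three blocks plus 100 clones still uses three physical blocks; when one clone rewrites a block, usage grows by exactly one, and every other clone keeps reading the original data.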


All efforts have been made to ensure the article is accurate. Please let us know in the comments section, or contact us if you feel there are any inaccuracies.

Historically, the Docker community has insisted that a single daemon be run within Docker containers. That said, SupervisorD has been around for quite some time. With the advent of RHEL 7, a lot of work has gone into running systemd within a Docker container. This probably changes things quite a bit:

http://developerblog.redhat.com/2014/05/05/running-systemd-within-docker-container/